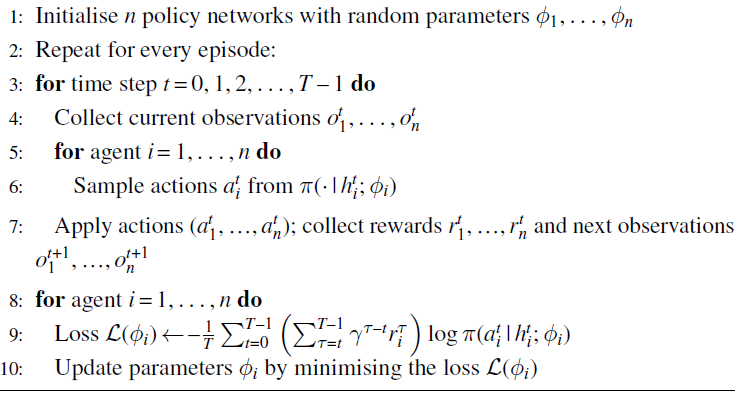

- A naive approach in this regard would be to directly apply the techniques from Function Approximation and PGMs to each agent without making use of joint information.

- 1 provides a comprehensive empirical comparison for MARL algorithms for Cooperative multi-agent tasks

- MARL algorithms typically require dense rewards to work properly. Centralized Training Decentralized Execution seems to be more appropriate for environments with sparse rewards

Independent Learning

Independent DQN

-

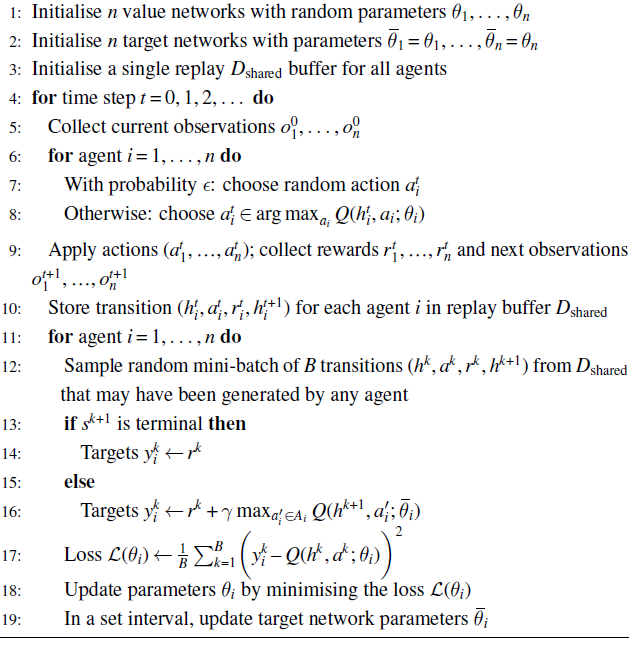

An extension of DQN but applied to multiple agents. Here, each agent optimizes the loss function using its local observation history from batch

. -

One challenge is that the replay buffer has to be modified since agents in IL do not distinguish between the non-stationarity caused by the environment and that by the other agents.

- This means that the agent can receive different rewards for performing the same action at the same state

- Additionally, because the agents’ policies update, the information in the replay buffer can become outdated .

- This can be solved in a few ways:

- By making the replay buffer smaller

- Using Importance Sampling to re-weight the experiences in the buffer to account for changing policies and correcting the non-stationarity.

- Hysteretic Q-Learning uses a smaller learning rate so that decreasing estimates will reduce the stochasticity of the other agent’s policies

- Use leniency ignores decreasing updates of action-value estimates with a given probability that decreases throughout training.

IPGM

- Compute the gradient with respect to its own policy parameters and then apply the Policy Gradient Theorem

- On policy algorithms have an advantage over off-policy approaches since the policy gradient is computed based on recent experiences generated by the current policy.

- It can be made asynchronous (such as A2C).

- We can also use PPO.

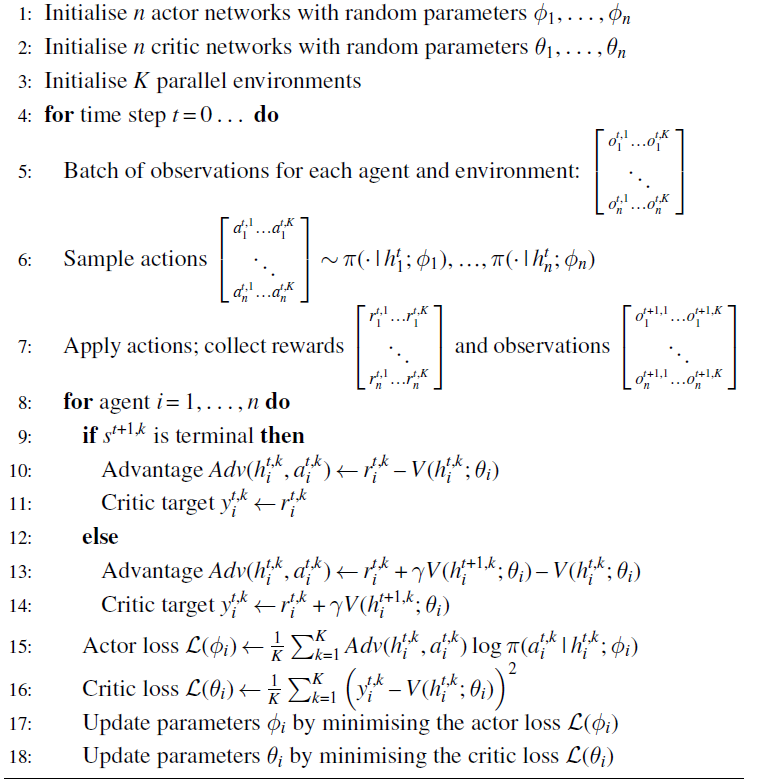

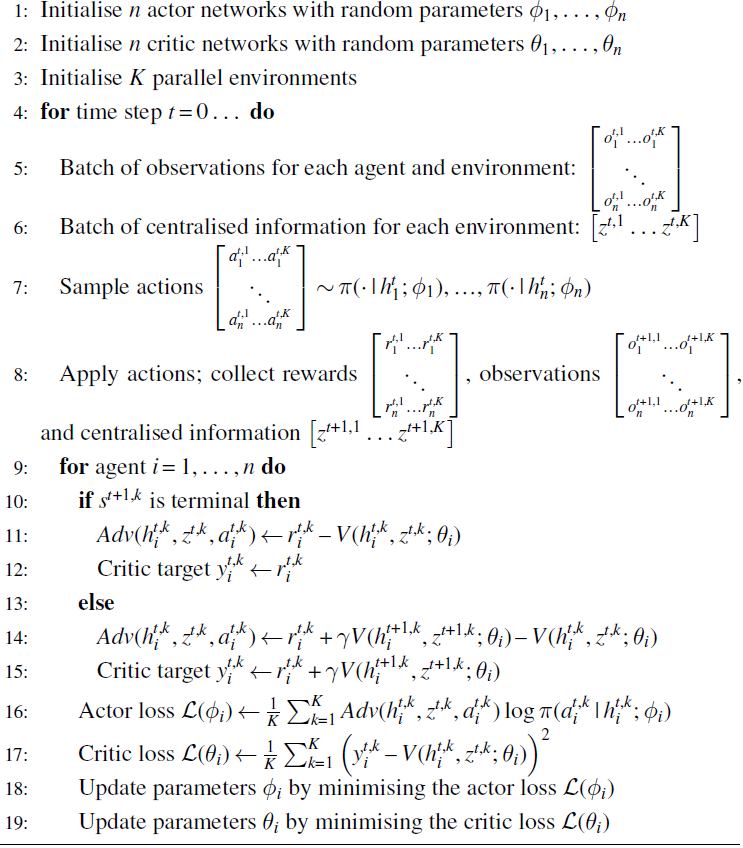

Multi-Agent PGMs

- The Multi-Agent Policy Gradient Theorem extends the regular Policy Gradient Theorem by considering all of the agent’s policies.

- We can make use of a centralized critic 2 that is conditioned on information beyond local information.

- The centralized critic is optimized using Semi-Gradient descent.

- At minimum, we need to condition the critic on the agent’s observation history

. - Any additional information may introduce noise, and increase variance, so it is subject to something akin to the bias-variance tradeoff.

- In practice, we use state history + local observation history.

- This makes it more robust against non-stationarity.

- Any Independent Learning algorithm can be instantiated with a centralized critic to learn a value function.

Action Value Critics and COMA

-

The centralized critic can make use of action-values

as well. which is conditioned on local observation history, centralized information information, and the joint action. -

The gradient update for the policy then becomes

-

We make use of on-policy data rather than off-policy data.

-

In practice, rather than have many action-values for each joint action (with high dimensionality at scale), we instead output the action value given the current joint action (which scales linearly to the number of agents)

-

-

We can use action-value critics for the multi-agent credit assignment problem using difference rewards to approximate the difference in reward if the agent took action

instead. We call the default action -

In practice, determining the default action is difficult, so we use the aristocratic utility which measures if the chosen action performs better than a random action sampled from the policy

-

-

Counterfactual Multi-agent Policy Gradient (COMA) estimates the advantage using a counterfactual baseline computed using the aristocratic utility. This enables more efficient computation at the cost of higher variance

Pareto-AC

-

Pareto-AC is an approach where the goal is to learn a Pareto-optimal equilibrium in no-conflict games. This is done by incorporating the inductive bias that all other agents prefer the same outcome. That is, assume all other agents follow the policy

which maximizes its reward (or in training, its -value). That is -

The loss function for the policy turns out to be

-

Pareto-AC suffers from inefficiencies as agent count increases

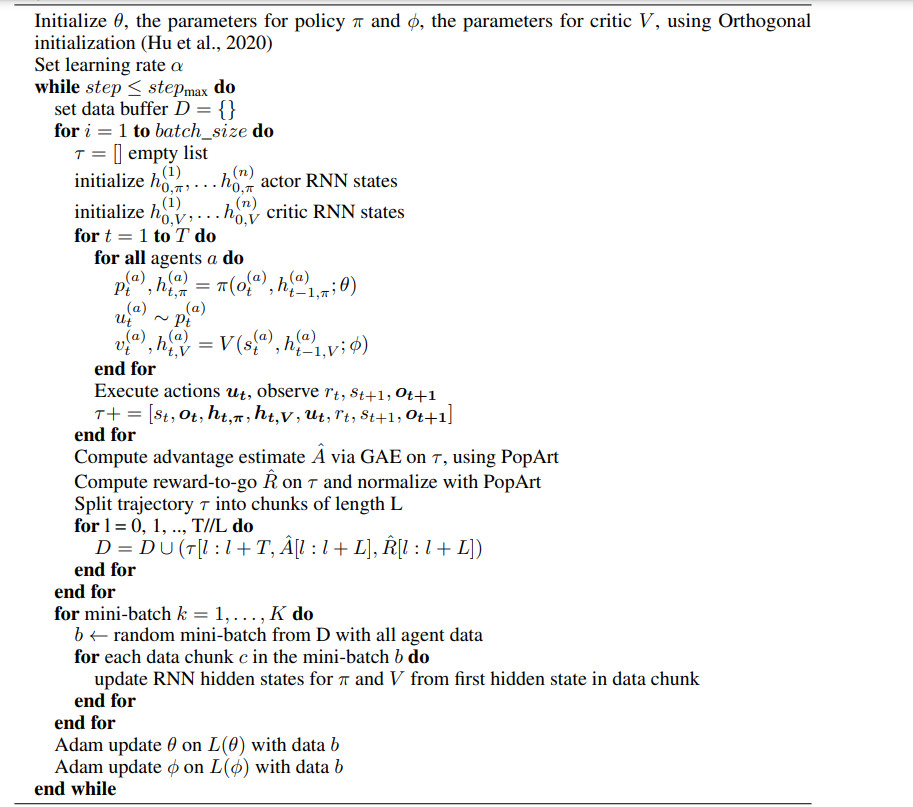

MAPPO

- Multi Agent PPO proposed by 3. PPO-based multi-agent algorithms achieve strong performance for multi-agent testbeds. PPO achieves good final returns with good sample efficiency for common reward games (i.e., cooperative settings)

- The success of MAPPO can, according to 3 be attributed to the following:

- Value normalization - by standardizing the value targets using running estimates of the mean and standard deviation of the value targets, instabilities due to value targets are mitigated.

- Input representation - MAPPO blends two approaches to input representation (namely (1) concatenating local observations as global state and (2) global information provided by the environment). It allows the agent to concatenate its local observation state with the global observation state. It is important to make sure that the input dimension does not blow up as a result.

- Training Data Usage: Empirical tests suggest that MAPPO’s performance degrades when samples are reused too often.

- This may be because of non-stationarity in MARL — using fewer epochs per update limits the change in agent policies.

- Also using more data to estimate gradients typically leads to improved practical performance

- As recommended by the paper: Use at most

training epochs on difficult environments and training epochs on easy environments. Additionally, avoid splitting data into mini-batches.

- PPO Clipping: Clipping prevents the policy and value functions from changing too much. It is (hypothesized) to limit non-stationarity.

- PPO batch size: A larger batch generally will result in more accurate gradients, yielding better updates to the value functions and policies.

-

In the case of heterogeneous agents, each agent can have its own actor and critic networks.

-

The actor objective is to maximize the following

Where

is the policy entropy and the entropy coefficient parameter. denotes the batch size and the number of agents. Also

And also

is computed using the General Advantage Estimator method -

The critic objective is to maximize the following

Where

is the discounted reward to go

Homogeneous Agents

Parameter Sharing

-

A method where each agent uses the same set of parameter values in their neural networks. That is, we have either of the following (or both)

-

This allows for a constant number of parameters regardless of agent count and it also allows for parameters to be updated by a broader set of experiences.

-

Assumption: The environment has strongly homogeneous agents.

-

We can get around the strong assumption of strongly homogeneous agents by using agent indexing where agents index their observations 4. In practice, this is not sufficient

Experience Sharing

-

Each agent has different parameters, but the parameters are trained on the same set of experiences.

-

For algorithms that use DQN, we may use a shared replay buffer.

-

Assumption: The environment has weakly homogeneous agents.

-

It has the following advantages over parameter sharing :

- This allows parameters to be trained on more diverse data

- It retains the flexibility of each agent to adopt its own policy (which has been shown to yield higher returns).

- It is more sample efficient

- It leads to agents having a uniform learning progression since agents that perform poorly have access to the experiences of the best agents.

- Agents can better coordinate and are more efficient, especially at data collection.

-

This requires storing more experiences in the buffer.

-

This is much easier to do with off-policy than on-policy approaches. On policy approaches require the use of Importance Sampling. We do this by imposing a loss function as follows

-

We can also weigh the experiences of other agents differently to the current agent. We do this using a hyperparameter

. The loss function is as follows

Other Techniques

- Agent Modeling - can also be used for deep learning so that each agent has a model of the other agents in the environment

- Decision Time Planning - approaches such as MCTS can be generalized to a deep-learning setting.

- Value Reward Decomposition - approaches that involve decomposing the joint value reward function to something simpler (i.e., based on the local value reward function for each agent)

- Mean Field MARL

- Heterogeneous MARL

- Communication with MARL

Links

-

Albrecht, Christianos, and Schafer - Ch. 9

- 9.5.3 - proof of the sufficiency of monotonicity to ensure IGM.

Footnotes

-

Papoudakis, Christianoos, Schafer, and Albrech (2021) Benchmarking Multi-Agent Deep Reinforcement Learning Algorithms in Cooperative Tasks ↩

-

We don’t need to define a decentralized critic since during inference the critic is never used. ↩

-

: Yu, et. al (2022) The Surprising Effectiveness of PPO in Cooperative Multi-Agent Games ↩ ↩2

-

Analogous to training the models to be few-shot since it encodes many behaviors ↩