-

Proposed by 1

-

Interactions within the population of agents are approximated by those between a single agent and the average effect from the overall population or neighboring agents.

-

It aims to address non-stationarity and convergence of MARL problems and the instability of various MARL approaches.

-

The

function is factorized using only pairwise local interactions. Let be the index set of the neighboring agents of agent as defined by the problem. -

The pairwise interaction

is approximated using Mean Field Theory. Let

be represented using a one-hot encoding of each of the possible actions. The mean action denoted

based on neighborhood is defined as follows Where

is a small perturbation to the mean action. -

The

function can then be expressed as Where

Essentially

acts as a random variable which serves as a small perturbation near zero. -

Assuming all agents are homogeneous, the remainders cancel and give us the following approximation

That is the pairwise interactions are simplified to be between

and a virtual mean agent (from the mean effect of all of ’s neighbors). -

The mean-field

function is updated as follows -

The MARL problem is thus converted to finding

’s best response with respect to the mean action of all its neighbors. -

The policy

can be defined using the virtual mean agent as -

For Policy Gradient Methods we minimize the loss function

Where

is the target mean value.

The gradient is then given by

-

For actor critic methods, the gradient of the actor is trained with the gradient

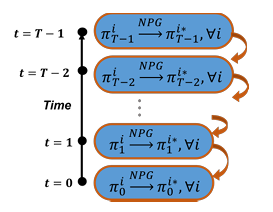

- 2 introduces a mean field approach to the problem of cooperative team-based MARL with infinitely many agents — The General-Sum LQ Mean-Field Type Game (GS-MFTG). The paper also develops the corresponding Multi-Player Receding-Horizon Natural Policy Gradient (MRPG)

-

Assume The LQ setting where agent dynamics are linear and costs are quadratic. This is to simplify things.

Agents are grouped into

teams. Each team has agents and team in team has linear dynamics — that is, it is driven by a linear function of the agent state, action, and mean state and actions of population . We term this setting as Cooperating-Competing (CC). In particular, the dynamics in the setting is given by Where each

Actions for the

The objective is the following for the team (and the

We also assume the mean field setting. The game itself is termed a Mean Field Type Game with the GS-MFTG its limit.

We denote

We will also use, for notation,

-

Define

-

The Nash Equilibrium of the MFTG is an

-

We decompose the MFG into two parts

Where

The above shows that we can decouple the dynamics in the setting as the mean-field setting and the deviation from the mean-field.

-

-

We use the Hamilton-Jacobi-Isaac equations to solve the Nash Equilibrium:

For

In fact, because of the LQ conditions,

-

The key idea is as follows For the deviation dynamics: find the policies for all agents at a fixed time

For the mean field dynamics: find the policies for all agents at a fixed time

-

At each time step

For

-

The algorithm proceeds using gradient descent. In particular, we use the Natural Policy gradient. In particular, if we assume that

Where the gradients are calculated as follows (the calculation for

Where

is a controller set perturbed at time

-

-

-

3 gives an approximation bound for applying Mean Field Control to the case of cooperative Heterogeneous MARL problems where we have a collection of

The bounds are given below, dependent on what the reward and transition dynamics of all agents are a function of:

- The joint state and action distributions across all classes

- The individual distributions of each class

- The marginal distribution of the entire population. We define

- The joint state and action distributions across all classes

Footnotes

-

Yang et al. (2018) Mean Field Multi-Agent Reinforcement Learning ↩

-

Zaman, Koppel, Lauriere, and Basar (2024) Independent RL for Cooperative-Competitive Agents: A Mean-Field Perspective ↩

-

Mondal et al. (2021) On the Approximation of Cooperative Heterogeneous Multi-Agent Reinforcement Learning (MARL) using Mean Field Control (MFC) ↩