- A Recurrent Neural Network (RNN) is a class of neural network whose computational graph may contain cycles.

- They are well suited to sequential tasks because, unlike vanilla neural networks, their context is not fixed by the input size: with the same number of weights as a regular neural network, an RNN can in theory process a sequence of arbitrary length by carrying information forward in hidden states.

- Note that the effective context window is still finite and, in practice, small.

Architectural Details

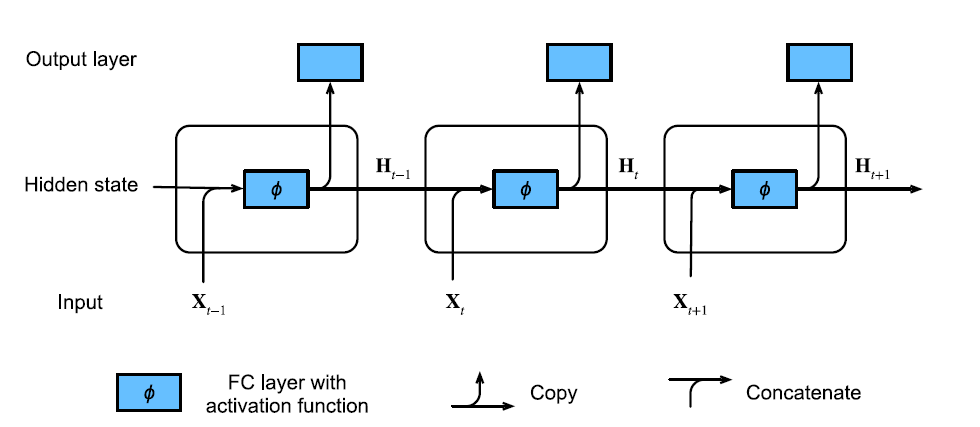

- A hidden state is a state that is not necessarily observed but holds some form of latent representation of the inputs. Typically, it is used to aggregate sequential data.

- We use hidden states to avoid having to store many parameters when we need the input's values from many time steps away. Let $h_t$ denote the hidden state at time step $t$ and $x_t$ the input. We calculate the hidden state as

$$h_t = f(x_t, h_{t-1})$$

That is, for an RNN, we want the current state to depend on the previous state. However, unlike a model with the Markov property, the hidden state does retain some information about everything seen so far.
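The recurrence can be made concrete with a scalar sketch. It assumes, purely for illustration, that the update is a `tanh` of a weighted sum of the current input and the previous hidden state; the names `step` and `run` and the weight values are hypothetical, not taken from these notes.

```python
import math

def step(x_t, h_prev, w_x=0.5, w_h=0.9, b=0.0):
    """One recurrence step: the new state depends on x_t and the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run(sequence, h0=0.0):
    """Fold a sequence of any length into a single hidden state."""
    h = h0
    for x_t in sequence:
        h = step(x_t, h)
    return h

# The same three parameters handle sequences of different lengths.
short_h = run([1.0, 0.0, 0.0])
long_h = run([1.0] + [0.0] * 50)

# The first input still influences the final state, but its trace
# fades as more steps pass.
print(0.0 < long_h < short_h)  # True
```

The fading trace in the longer sequence is one way to see why the usable context stays small in practice even though no hard limit is built in.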


We make use of Recurrent Layers. These are layers which use hidden states obtained from previous computations.

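More formally, let $X_t \in \mathbb{R}^{n \times d}$ be the input at time step $t$, $H_t \in \mathbb{R}^{n \times h}$ be the hidden layer output of time step $t$, and $\phi$ be an activation function, where $n$ is the batch size, $d$ the input dimension, and $h$ the number of hidden units. We calculate the hidden layer output as

$$H_t = \phi(X_t W_{xh} + H_{t-1} W_{hh} + b_h)$$

- Where $W_{xh} \in \mathbb{R}^{d \times h}$ and $W_{hh} \in \mathbb{R}^{h \times h}$ are weights, and $b_h \in \mathbb{R}^{1 \times h}$ is a bias term.

- The output is then a function of $H_t$. Let $\psi$ be an activation function, then the output is given by

$$O_t = \psi(H_t W_{hq} + b_q)$$

- Where $W_{hq} \in \mathbb{R}^{h \times q}$ and $b_q \in \mathbb{R}^{1 \times q}$, where $q$ is the output dimension.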
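A dependency-free sketch of a recurrent layer's forward pass may help here. It assumes the common batched form $H_t = \phi(X_t W_{xh} + H_{t-1} W_{hh} + b_h)$ followed by $O_t = \psi(H_t W_{hq} + b_q)$, with `tanh` for $\phi$, the identity for $\psi$, and an all-zero initial state. All function names, the initialisation range, and the toy dimensions are illustrative assumptions.

```python
import math
import random

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def affine(X, W, b):
    """Compute X W + b, with the bias row b broadcast over the batch."""
    return [[v + b_j for v, b_j in zip(row, b)] for row in matmul(X, W)]

def elementwise(fn, M):
    """Apply a scalar function to every entry of a matrix."""
    return [[fn(v) for v in row] for row in M]

def rnn_forward(X_seq, params, phi=math.tanh, psi=lambda v: v):
    """Run the layer over a list of (n x d) inputs, one per time step."""
    W_xh, W_hh, b_h, W_hq, b_q = params
    n, h = len(X_seq[0]), len(W_hh)
    H = [[0.0] * h for _ in range(n)]            # H_0, assumed to be zeros
    outputs = []
    for X_t in X_seq:
        # H_t = phi(X_t W_xh + H_{t-1} W_hh + b_h)
        from_input = affine(X_t, W_xh, b_h)
        from_state = matmul(H, W_hh)
        H = elementwise(phi, [[a + r for a, r in zip(ra, rr)]
                              for ra, rr in zip(from_input, from_state)])
        # O_t = psi(H_t W_hq + b_q)
        outputs.append(elementwise(psi, affine(H, W_hq, b_q)))
    return outputs, H

def init_params(d, h, q, seed=0):
    """Small random weights and zero biases (an arbitrary choice)."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return mat(d, h), mat(h, h), [0.0] * h, mat(h, q), [0.0] * q

n, d, h, q, T = 2, 3, 4, 5, 6
params = init_params(d, h, q)
X_seq = [[[0.1 * (t + i + j) for j in range(d)] for i in range(n)]
         for t in range(T)]
outputs, H_T = rnn_forward(X_seq, params)
print(len(outputs), len(outputs[0]), len(outputs[0][0]))  # 6 2 5
```

Note that `params` does not depend on `T`: the same weights are reused at every time step, which is why the parameter count stays fixed as the sequence grows.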

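Typical training uses Backpropagation Through Time (BPTT), which unrolls the network across time steps and applies ordinary backpropagation to the unrolled graph.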
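As a rough illustration of Backpropagation Through Time, the sketch below unrolls a scalar RNN, walks backwards through the stored states to accumulate gradients for the shared weights, and compares the result with a finite-difference estimate. The `tanh` update, the squared-error loss on the final state only, and all names and values are assumptions chosen to keep the example small.

```python
import math

def forward(xs, w_x, w_h):
    """Unroll h_t = tanh(w_x * x_t + w_h * h_{t-1}) and keep every state."""
    hs = [0.0]                          # h_0
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs

def loss(xs, y, w_x, w_h):
    """Squared error between the final hidden state and the target y."""
    return 0.5 * (forward(xs, w_x, w_h)[-1] - y) ** 2

def bptt(xs, y, w_x, w_h):
    """Gradients of the loss w.r.t. the shared weights, going back in time."""
    hs = forward(xs, w_x, w_h)
    grad_w_x = grad_w_h = 0.0
    dh = hs[-1] - y                     # dL/dh_T
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)    # back through tanh at step t
        grad_w_x += da * xs[t - 1]      # weights are shared, so contributions sum
        grad_w_h += da * hs[t - 1]
        dh = da * w_h                   # pass the gradient on to h_{t-1}
    return grad_w_x, grad_w_h

xs, y, w_x, w_h = [0.5, -0.3, 0.8], 0.2, 0.7, -0.4
g_x, g_h = bptt(xs, y, w_x, w_h)

# Central finite differences as an independent check.
eps = 1e-6
num_x = (loss(xs, y, w_x + eps, w_h) - loss(xs, y, w_x - eps, w_h)) / (2 * eps)
num_h = (loss(xs, y, w_x, w_h + eps) - loss(xs, y, w_x, w_h - eps)) / (2 * eps)
print(abs(g_x - num_x) < 1e-6, abs(g_h - num_h) < 1e-6)  # True True
```

The repeated multiplication by `w_h` and the `tanh` derivative in the backward loop is where gradients can shrink or grow over long sequences.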