- 1 investigates a MARL setup for a cooperative-competitive task (in this case, microplastic cleanup)

- It makes use of a GNN-based communication system.

- Limitation: It’s very limited in scope. At best, it illustrates how MARL can be applied to a real world problem.

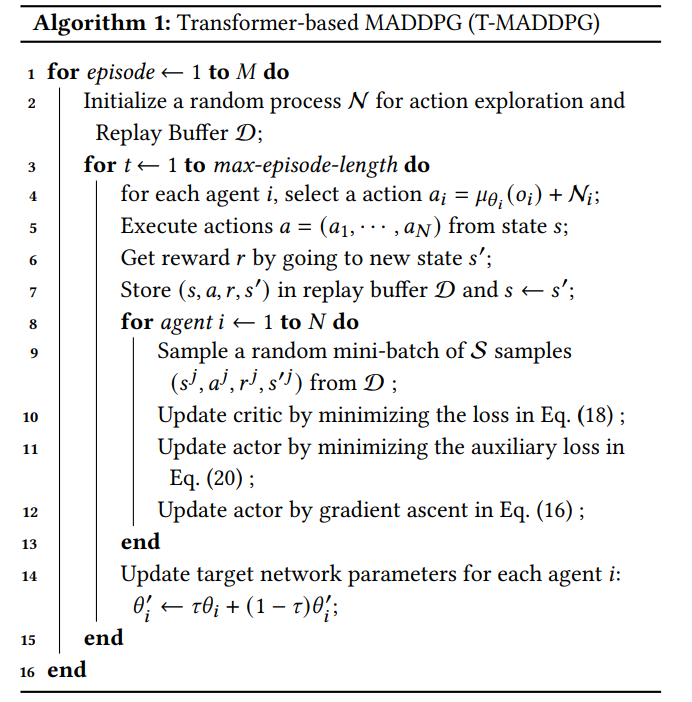

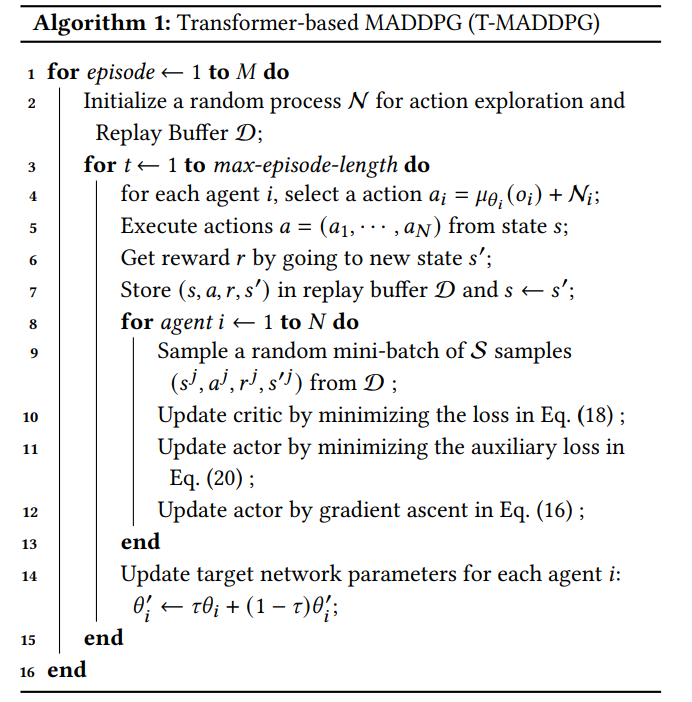

- 2 proposes a Transformer-based multi actor-critic framework for active voltage control. It also aims to stabilize the training process of MARL algorithms with a transformer.

- The network operates on a power distribution network (represented as a graph).

- Raw observations are projected into an embedding space. Since it operates on a graph, it makes use of the adjacency matrix as additional positional information. This information is then fed to a transformer

- A GRU is used to project the representation to an action.

Transformer-Based MADDPG. Image taken from Wang, Zhou Li (2022)