- Positional encoding accounts for the fact that the order in which tokens appear in a sequence is important.

- The original positional encoding proposed encodes both the absolute position of a token and its position relative to other tokens. For any fixed offset $k$, the positional encoding at position $t + k$ can be obtained through a linear projection of the encoding at position $t$.

- An ideal positional encoding has the following properties 1

- Unique encoding for each position across sequences regardless of sequence length.

- Linear relation between two encoded positions (for simplicity).

- Generalizable to longer sequences than those encountered in training.

- Deterministically generated

- Extensible to multiple dimensions.

- Position encoding can be applied either only to the input of the first layer, or in every layer. The latter tends to perform better.

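- The positional encoding $P$ is applied to the input embeddings $X$ by elementwise addition, giving $X + P$.

- Sinusoidal Positional Embedding constructs the embedding as follows, where $t$ is the position, $j$ indexes a pair of dimensions, and $d$ is the embedding dimension

$$P_{t,2j} = \sin\left(\frac{t}{10000^{2j/d}}\right), \qquad P_{t,2j+1} = \cos\left(\frac{t}{10000^{2j/d}}\right)$$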
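A minimal NumPy sketch of the sinusoidal construction may help here. It assumes the standard formulation with base 10000 and interleaved sine/cosine columns; the function names `sinusoidal_positional_encoding` and `offset_matrix` are hypothetical and chosen only for illustration. The second function illustrates the linear-projection property: each sine/cosine pair at position `t + k` is a fixed rotation of the pair at position `t`.

```python
import numpy as np

def sinusoidal_positional_encoding(num_positions, d_model, base=10000.0):
    """Return P of shape (num_positions, d_model) with
    P[t, 2j] = sin(t / base**(2j/d_model)) and P[t, 2j+1] = cos(same angle)."""
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    positions = np.arange(num_positions)[:, None]           # (n, 1)
    freqs = base ** (-np.arange(0, d_model, 2) / d_model)   # (d/2,)
    angles = positions * freqs                               # (n, d/2)
    P = np.zeros((num_positions, d_model))
    P[:, 0::2] = np.sin(angles)
    P[:, 1::2] = np.cos(angles)
    return P

def offset_matrix(k, d_model, base=10000.0):
    """Block-diagonal M_k such that P[t + k] = M_k @ P[t] for every t."""
    freqs = base ** (-np.arange(0, d_model, 2) / d_model)
    M = np.zeros((d_model, d_model))
    for j, w in enumerate(freqs):
        c, s = np.cos(w * k), np.sin(w * k)
        # sin(a + b) = sin(a)cos(b) + cos(a)sin(b)
        # cos(a + b) = cos(a)cos(b) - sin(a)sin(b)
        M[2 * j:2 * j + 2, 2 * j:2 * j + 2] = [[c, s], [-s, c]]
    return M

# Usage: add the encoding to (random, illustrative) token embeddings.
X = np.random.default_rng(0).normal(size=(6, 8))
P = sinusoidal_positional_encoding(6, 8)
X_with_position = X + P
```

Note that `offset_matrix` depends only on the offset `k`, not on the position `t`, which is what makes relative offsets recoverable by a linear map.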

- Learned Positional Encoding involves treating the positional encoding as a learnable parameter.

-

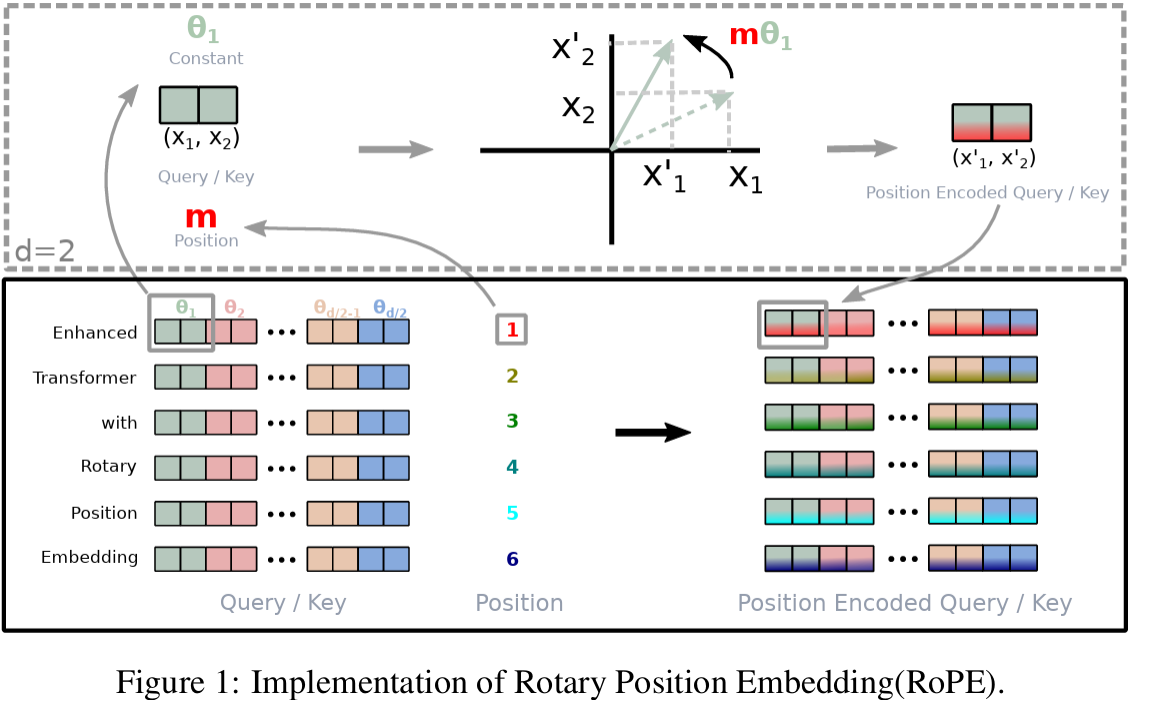

Rotary Position Embedding (RoPE) 2 encodes the absolute position with a rotation matrix while incorporating explicit relative position dependency.

-

We formulate the inner product of

-

Consider the simpler case of

Further assume we have the initial conditions with no position information encoded.

We use Complex numbers to represent the

Where

We can find a solution by setting

One solution could therefore be to set

Furthermore, since

For some function

Also setting

Thus

Let us set

Therefore

Where

-

We can represent the solution using a rotation matrix of the form

-

In the general form where

Where

And

The inner product is therefore

-

For completeness, set

-

Because

-

Notice we do not add position information to the values.
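The rotation-matrix view of RoPE can be sketched in a few lines of NumPy. This is an illustration under assumptions rather than the exact formulation derived in these notes: it pairs consecutive dimensions, uses the frequencies $\theta_i = 10000^{-2i/d}$ from the RoFormer paper, and the function name `rope_rotate` is hypothetical. It is applied to queries and keys only, consistent with the remark that values carry no position information.

```python
import numpy as np

def rope_rotate(x, position, base=10000.0):
    """Rotate each consecutive pair (x[2i], x[2i+1]) of a 1-D vector
    by the angle position * theta_i, with theta_i = base**(-2i/d)."""
    d = x.shape[-1]
    if d % 2 != 0:
        raise ValueError("this sketch assumes an even dimension")
    theta = base ** (-np.arange(0, d, 2) / d)   # (d/2,)
    angles = position * theta
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[0::2], x[1::2]
    out = np.empty_like(x, dtype=float)
    out[0::2] = x_even * cos - x_odd * sin      # 2-D rotation, first coordinate
    out[1::2] = x_even * sin + x_odd * cos      # 2-D rotation, second coordinate
    return out

# The query-key inner product depends only on the relative offset (here 4).
rng = np.random.default_rng(0)
q, k = rng.normal(size=8), rng.normal(size=8)
score_a = rope_rotate(q, 3) @ rope_rotate(k, 7)
score_b = rope_rotate(q, 10) @ rope_rotate(k, 14)
```

Because each pair is rotated, vector norms are preserved, and shifting both positions by the same amount leaves the score unchanged.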

-

One main challenge with RoPE is extrapolation.

- Transformer-XL 3 proposes a modified relative positional encoding for use in models that have extended context lengths.

We use the following decomposition. Let

We perform the following reparameterization

- Replace

- Replace

- Since the query vector is the same for all query positions, the bias should remain the same regardless of query positions.

- Split

- The final reparameterization now looks like

- The first term corresponds to content-based addressing

- The second term corresponds to content-dependent positional bias.

- The third term corresponds to global content bias

- The fourth term corresponds to global positional bias
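For reference, the four terms can be written out as in Dai et al. (2019). The notation below is taken from that paper and is an assumption relative to these notes, whose own symbols did not survive: $E_{x_i}$ is the content embedding of token $i$, $R_{i-j}$ is the relative positional encoding, $W_{k,E}$ and $W_{k,R}$ are the content and position key projections, and $u$, $v$ are learned vectors shared across query positions.

```latex
\begin{aligned}
A^{\mathrm{rel}}_{i,j} ={}&
  \underbrace{E_{x_i}^{\top} W_q^{\top} W_{k,E}\, E_{x_j}}_{\text{(a) content-based addressing}}
  + \underbrace{E_{x_i}^{\top} W_q^{\top} W_{k,R}\, R_{i-j}}_{\text{(b) content-dependent positional bias}} \\
 &+ \underbrace{u^{\top} W_{k,E}\, E_{x_j}}_{\text{(c) global content bias}}
  + \underbrace{v^{\top} W_{k,R}\, R_{i-j}}_{\text{(d) global positional bias}}
\end{aligned}
```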

- Shaw et al. 4 introduce relative positional encoding.

- Rationale: This is one approach to consider arbitrary pairwise relations between any two input tokens. We treat the input as a labeled fully connected digraph.

- The edge between input elements

- We clip the maximum distance to a maximum absolute value

- Rationale: This lets us generalize to sequence lengths not seen in training. It is also hypothesized that precise positional information is not useful beyond a certain distance.
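The clipping step can be sketched as follows. This is a simplified single-head illustration that assumes the formulation in Shaw et al. (2018), where a learned embedding for each clipped relative distance is added to the key before the dot product. The names `relative_position_index`, `relative_key_logits`, `rel_table`, and `max_distance` are hypothetical.

```python
import numpy as np

def relative_position_index(seq_len, max_distance):
    """Index matrix with entry [i, j] = clip(j - i, -k, k) + k, in [0, 2k]."""
    positions = np.arange(seq_len)
    relative = positions[None, :] - positions[:, None]        # relative[i, j] = j - i
    clipped = np.clip(relative, -max_distance, max_distance)
    return clipped + max_distance                              # shift to non-negative indices

def relative_key_logits(Q, K, rel_table, max_distance):
    """Scaled logits e[i, j] = Q[i] . (K[j] + a[i, j]) / sqrt(d), where
    a[i, j] is looked up from rel_table of shape (2k + 1, d)."""
    n, d = Q.shape
    idx = relative_position_index(n, max_distance)
    A = rel_table[idx]                                         # (n, n, d)
    content = Q @ K.T                                          # first term
    position = np.einsum("id,ijd->ij", Q, A)                   # second term
    return (content + position) / np.sqrt(d)

# Usage with random, illustrative inputs: only 2k + 1 = 3 embeddings are needed
# regardless of sequence length.
rng = np.random.default_rng(0)
Q, K = rng.normal(size=(4, 6)), rng.normal(size=(4, 6))
rel_table = rng.normal(size=(3, 6))
logits = relative_key_logits(Q, K, rel_table, max_distance=1)
```

Since the table size depends only on the clipping distance, the same parameters apply to sequences longer than those seen in training.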

-

We consider

Where

-

For efficiency, we share the relative position encoding either across heads or across sequences

-

- When computing the scaled dot product, we separate the computation and perform tensor reshaping on the second term in the following

The computation for the output using

Incorporating Long Contexts

- Attention with Linear Biases (ALiBi) 5 proposes a position encoding method that adds a bias to the query-key attention scores, with a penalty proportional to the distance between query and key.

-

The authors speculate that the failure to extrapolate is due to the choice of positional encoding used.

-

Using ALiBi entails training the model on short sequences. Thus, training incurs lower cost.

-

ALiBi simply entails modifying the attention mechanism by introducing a static, non-learned bias. For the

Where

- The paper uses a geometric sequence for

-

ALiBi has an inductive bias towards recency. The penalty decreases as the distance between a key and query diminishes.
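A small sketch of the static bias may make the recency effect concrete. It assumes the causal setting and the slope schedule described in the ALiBi paper, a geometric sequence starting at $2^{-8/n}$ with the same ratio for $n$ heads; the function names `alibi_slopes` and `alibi_bias` are hypothetical.

```python
import numpy as np

def alibi_slopes(num_heads):
    """Geometric sequence of head slopes: 2**(-8/n), 2**(-16/n), ..., 2**(-8)."""
    start = 2.0 ** (-8.0 / num_heads)
    return start ** np.arange(1, num_heads + 1)

def alibi_bias(seq_len, num_heads):
    """Bias of shape (heads, seq_len, seq_len): -slope * (i - j) for keys j <= i,
    and -inf for future keys (causal mask)."""
    positions = np.arange(seq_len)
    distance = positions[:, None] - positions[None, :]         # distance[i, j] = i - j
    slopes = alibi_slopes(num_heads)[:, None, None]            # (h, 1, 1)
    return np.where(distance[None] >= 0, -slopes * distance[None], -np.inf)

# Usage: the bias is added to the query-key scores before the softmax.
bias = alibi_bias(seq_len=4, num_heads=8)
```

The bias is zero on the diagonal and becomes more negative as the key moves further back from the query, and nothing in it is learned.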

- ALiBi’s decrease in perplexity when given longer sequences is largely explained by its improved avoidance of the early token curse.

-

Limitation: When using a larger context during validation, ALiBi might not actually be using contexts longer than the one it was trained on.

- Wu et al. 6 propose the DA-Transformer (Distance-Aware Transformer), which incorporates the real distances between tokens when re-scaling the raw self-attention weights.

- Rationale: Global and local context modelling usually have different distance preferences for attention.

- In each attention head, we use a learnable parameter

- We then design a function

- The scale of

- Our choice for

- The re-scaled coefficients are used to adjust the attention weights. We obtain the attention matrix as follows

- The scaling is multiplicative since adding might over-amplify the attention weights.

- The ReLU is incorporated since the sign of

- ReLU also adds sparsity since only positive attention is amplified.

- The extra time and space complexity for computing the above is
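The re-scaling steps above can be sketched for a single head as follows. The formulas in these notes did not survive, so everything here is an assumption based on one reading of the DA-Transformer paper: distances $R_{ij} = |i - j|$ are scaled by a per-head weight `w`, mapped through a learnable sigmoid $f(R; v) = \frac{1 + e^{v}}{1 + e^{v - R}}$, and multiplied into the ReLU of the raw scores. The function name `da_attention_weights` is hypothetical.

```python
import numpy as np

def da_attention_weights(Q, K, w, v):
    """Single-head distance-aware attention weights (illustrative sketch).
    w: per-head distance weight; v: per-head sigmoid parameter (both scalars)."""
    n, d = Q.shape
    positions = np.arange(n)
    R = np.abs(positions[:, None] - positions[None, :]).astype(float)  # real distances
    R_head = w * R                                                      # head-specific scaling
    R_hat = (1.0 + np.exp(v)) / (1.0 + np.exp(v - R_head))             # assumed form of f; f(0) = 1
    scores = np.maximum(Q @ K.T, 0.0) * R_hat / np.sqrt(d)             # ReLU, then multiplicative re-scaling
    scores = scores - scores.max(axis=-1, keepdims=True)               # numerically stable softmax
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

# Usage with random, illustrative inputs.
rng = np.random.default_rng(0)
Q, K = rng.normal(size=(5, 4)), rng.normal(size=(5, 4))
weights = da_attention_weights(Q, K, w=0.5, v=1.0)
```

In this sketch, setting `w = 0` makes every re-scaling coefficient equal to 1, so the head ignores distance entirely, while positive and negative `w` favor long and short distances respectively.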

Links

- Zhang et al., Ch. 11 - for everything about the basics of the transformer model.

-

All about Attention - more about attention

Footnotes

-

RoPE has all these properties. See https://huggingface.co/blog/designing-positional-encoding ↩

-

Su et al. (2021) RoFormer: Enhanced Transformer with Rotary Position Embedding ↩

- Dai et al. (2019) Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context ↩

-

Shaw, Uszkoreit, Vaswani (2018) Self-Attention with Relative Position Representations ↩

-

Press, Smith, Lewis (2021) Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation ↩

-

Wu, Wu, Huang (2021) DA-transformer: Distance Aware Transformer ↩