Soft Q Learning

-

Aims to learn maximum entropy policies 1. It expresses the optimal policy via a Boltzmann distribution (using the property that Boltzmann distributions maximize entropy).

-

Instead of learning the best way to perform the task, the resulting policies try to learn all of the ways of performing the task

-

We reframe the task as finding the optimal policy

such that Where

is the temperature parameter for balancing reward and entropy. -

Policies are represented as

Where

is an energy function. -

(Haarnoja Thm. 1) Let the soft

function and soft function be defined as The optimal policy for maximum entropy RL is given as

-

Soft Bellman equation (Haarnoja Thm. 2)

-

Soft Q-iteration (Haarnoja Thm. 3) The following is a soft version of Bellman Backup

Assume that

and are bounded , and exists Then we can perform the following fixed point iteration to converge to

and -

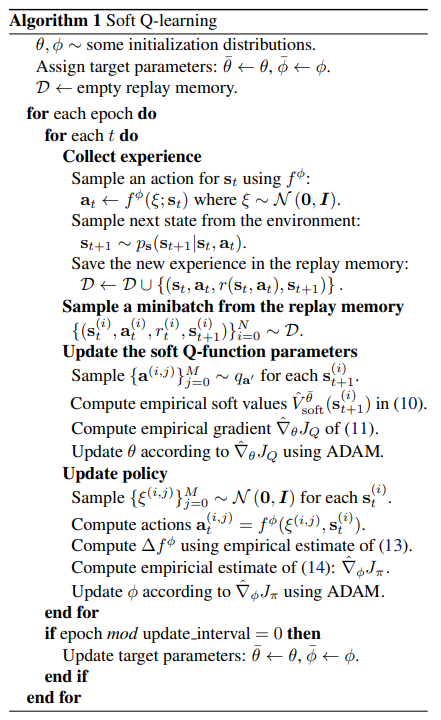

A stochastic version involves optimizing via Importance Sampling using

as an arbitrary distribution over action space. We compute and a general loss function as follows The stochastic version is computed using Approximate Sampling and Stein Variational Gradient Descent

SAC

-

Soft Actor Critic [^Haarnoja _2018] Extends Soft Q Learning by having an actor also maximize both expected reward and entropy. To succeed in performing a task while performing as randomly as possible. This approach is very stable and can achieve SOTA performance on continuous tasks.

- Previous methods such as Trust Region Policies require new samples to be collected for each gradient step, which becomes expensive as tasks become more complex.

- Using DDPG, while sample efficient, is challenging to use due to extreme brittleness and hyperparameter sensitivity.

- Soft Q Learning requires complex approximate inference procedures in continuous action spaces.

-

SAC relies on three components

- An actor-critic architecture with separate policy and value function networks. The actor itself is stochastic.

- An off policy formulation that enables reuse of previously collected data for efficiency

- Entropy maximization to enable stability and exploration.

-

Soft Policy Evaluation (Haarnoja Lem. 1) - Let

where . Let . The sequence converges to the soft value of as . In other words, we can compute the soft value using repeated applications of the soft Bellman operator. -

We restrict the choice of policy

to be in some set of policies . TO that end, we use the KL Divergence. Thus, for each improvement step, we update according to Where

-

Soft Policy Improvement (Haarnoja Lem. 2) Let

-

Soft Policy Iteration (Haarnaja Thm. 1) repeated application of soft policy evaluation and soft policy improvement from any

- In other words, we can generalize GPI for soft RL.

-

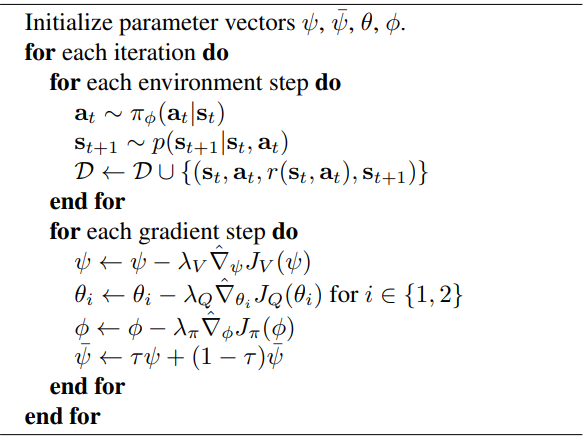

Soft Actor-Critic 2 approximates Soft Policy Iteration by using Function Approximation using

-

A parameterized state value function

Its goal is to minimize the squared residual error using the distribution of previously sampled states and actions

-

A soft

Where

-

A Tractable policy

Optimizing requires reparameterizing the policy using a neural network transformation since the target is a differentiable neural network

-

-

We use two Q-functions in a similar manner to double Q. This speeds up training.

Links

Footnotes

-

Haarnoja, Tang, Abbeel, and Levine (2017). Reinforcement Learning with Deep Energy-based Policies ↩

-

Haarnoja, Zhou, Abbeel and Levine (2018) Soft Actor Critic—Off Policy Maximum Entropy Deep Learning with a Stochastic Actor ↩