DPG

-

Introduces deterministic policy gradient algorithms for RL with continuous actions 1

-

The goal is to learn a deterministic policy from a stochastic behavior policy via an off-policy actor-critic algorithm.

-

Specific to this, let

be a deterministic policy with parameter , and let be the discounted state distribution defined as The objective becomes

-

Deterministic Policy Gradient Theorem.

-

(Silver Th.2) If the stochastic policy

is re-parameterized to deterministic policy and variation variable , the stochastic policy is eventually equivalent to the deterministic case is eventually equivalent to the deterministic case where . However, this will require more samples . More formally Which implies stochastic policy gradient algorithms can be applied to deterministic policy gradient.

On-Policy Deterministic Actor Critic

-

Actor takes actions deterministically

. We then use SARSA update and combine it with the Deterministic Policy Gradient Theorem . -

Disadvantage: No guarantees of optimality when we do not explore enough.

Off-Policy Deterministic Actor-Critic

- The objective is now arranged over the state distribution of the behavior policy

-

The update is then done by performing the same update as the on-policy case.

-

Note: We typically use Importance Sampling when using off-policy methods. However, because the deterministic policy gradient removes the integral over actions, we do not need importance sampling in the actor and the critic.

-

(Silver Th.3) - We can find a critic

such that the gradient can be replaced by without affecting the policy gradient if the following holds:

minimizes the MSE

-

For any deterministic policy, we can take the following as the function approximator

Where

is a baseline function independent of the action. -

A linear function approximator is sufficient to select the direction in which the actor should adjust its policy parameters.

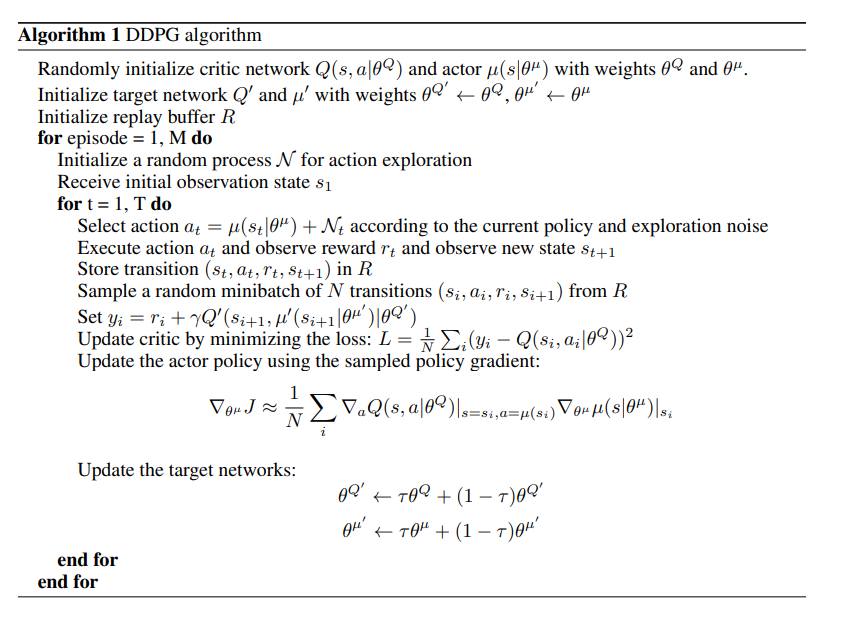

DDPG

-

Deep Deterministic Policy Gradient.

-

Presents an actor-critic model free algorithm using DPGs operating on continuous action spaces. 2.

-

We make use of soft target updates. This is done by creating a copy of the actor and critic networks

and . The weights are then updated by having them slowly track the learned networks. -

This makes target values change slowly and improves stability. At the cost of slowing learning.

-

This also makes use of Batch Normalization to minimize covariance shift during training.

-

To do better exploration, the exploration policy

is constructed 3 by adding noise

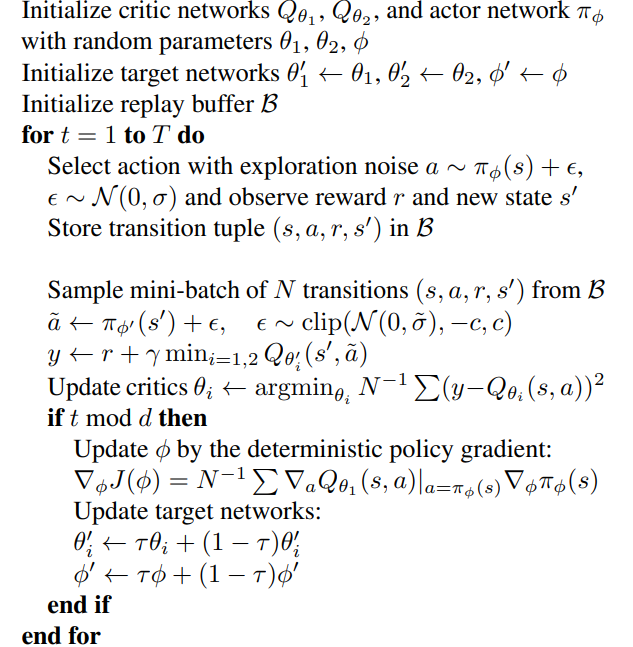

TD3

- Twin Delayed Deep Deterministic 4.

- It aims to address issues with overestimation for Q-learning, which is exaggerated during in a function approximation with bootstrapping setting, behavior which is characteristic of the Deadly Triad. ]

- It proposes the following changes to augment DDPG and to improve on double learning.

-

Clipped Double Q Learning. We make use of two deterministic actors

and two critics . We optimize with respect to and with . Because the policy may change slowly, using the regular approach for double learning may mean the networks are too similar. So, we use a minimum estimation instead. Where

Another side effect of this approach is that we favor safer, more stable learning since we give more value to states with lower variance estimation error.

-

Another key observation is that value estimates are coupled with policies so that value estimates diverge because of poor policies which become poorer due to inaccurate value functions.

The trick, therefore, is to update the policy network at a lower frequency than the value network, more specifically, only update when the value error is as small as possible. The updates become much slower but, theoretically, of higher quality.

-

Finally, we perform target policy smoothing. Since we can overfit on narrow peaks in the value function, we introduce a smoothing regularization strategy on the value function to reduce the variance caused by function approximation.

We fit the value of a small area around the target action

To bootstrap off of similar state-action value estimates.

In practice, we can approximate this by adding a random amount of noise to the target policy and averaging over minibatches.

This is to enforce the idea that similar actions should have similar values, akin to Expected SARSA.

-

Footnotes

-

Silver, et al. (2014) “Deterministic policy gradient algorithms.” ↩

-

Lillicrap, et al. (2019) Continuous Control with Deep Reinforcement Learning ↩

-

The authors make use of an Ornstein-Uhlenbeck process to generate temporally correlated exploration for exploration efficiency in physical control problems with inertia. ↩

-

Fujimoto, van Hoof, and Meger (2018) Addressing Function Approximation Error in Actor-Critic Methods ↩