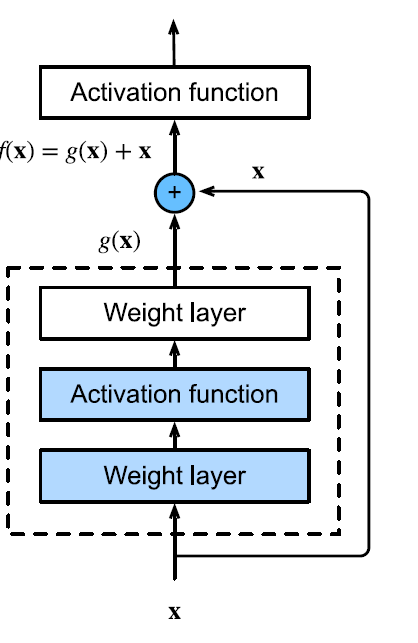

- A Residual Block is a block whose output is the sum of its input (carried forward from the previous layer by a skip connection) and the output of the block's own layers, with the activation applied after the addition.


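The motivation behind residual learning is that adding layers should add expressivity without losing any. A deeper network is guaranteed to do at least as well as a shallower one only if its extra layers can represent the identity, and residual blocks make the identity easy for deeper layers to express.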
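The Residual Block defined above can be sketched as a plain-Python forward pass, so it runs without a framework. This is a minimal illustration under assumptions: the two-layer branch `W2 · relu(W1 · x)`, the missing biases, and the names `residual_branch` and `residual_block` are hypothetical choices, not a specific library's API. Real implementations typically use convolutions and batch normalization inside the branch.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


def residual_branch(x: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """Hypothetical residual branch g(x) = W2 · relu(W1 · x), biases omitted."""
    return matvec(W2, relu(matvec(W1, x)))


def residual_block(x: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """Add the skip connection (x) to the branch output, then apply ReLU.

    The branch output must have the same length as x for the addition.
    """
    g = residual_branch(x, W1, W2)
    return relu([xi + gi for xi, gi in zip(x, g)])


W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[0.5, 0.0], [0.0, 0.5]]
print(residual_block([1.0, -2.0], W1, W2))  # [1.5, 0.0]
```

Applying the activation only after the sum follows the description above; the skip path itself carries `x` through untouched.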

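More formally, let $\mathbf{x}$ be the input of the current layer and let $f(\mathbf{x})$ be the desired mapping to be learnt. Rather than learn $f(\mathbf{x})$ directly, we learn the residual $g(\mathbf{x}) = f(\mathbf{x}) - \mathbf{x}$.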
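Learning the residual means that representing the identity $f(\mathbf{x}) = \mathbf{x}$ only requires $g(\mathbf{x}) = 0$. The sketch below contrasts a plain block with a residual block when all weights are zero; the branch form `W2 · relu(W1 · x)` and the function names are hypothetical, chosen only for illustration. The residual block is shown without a final activation so that its output is exactly $\mathbf{x} + g(\mathbf{x})$.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


def plain_block(x: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """Plain block: has to produce f(x) directly as W2 · relu(W1 · x)."""
    return matvec(W2, relu(matvec(W1, x)))


def residual_block(x: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """Residual block without final activation: returns x + g(x)."""
    g = matvec(W2, relu(matvec(W1, x)))
    return [xi + gi for xi, gi in zip(x, g)]


zeros = [[0.0, 0.0], [0.0, 0.0]]
x = [3.0, -1.0]
print(plain_block(x, zeros, zeros))     # [0.0, 0.0]  (the zero function)
print(residual_block(x, zeros, zeros))  # [3.0, -1.0] (the identity)
```

With zero weights the plain block collapses to the zero function, while the residual block already computes the identity, so a residual layer that is not needed can simply keep its branch near zero.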

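This procedure serves as another way to counteract vanishing gradients. Because the skip connection passes $\mathbf{x}$ through unchanged, the Jacobian of the block is $\frac{\partial f}{\partial \mathbf{x}} = \mathbf{I} + \frac{\partial g}{\partial \mathbf{x}}$, so the gradient always has a direct path back through the identity term.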
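The effect of the identity term can be seen in a toy scalar model. This is a minimal sketch under assumptions: each layer is a hypothetical scalar map, `h -> tanh(w * h)` for a plain stack and `h -> h + tanh(w * h)` for a residual stack, with the same weight `w` in every layer and no training. The chain rule multiplies one local derivative per layer.

```python
import math


def plain_grad(x: float, w: float, depth: int) -> float:
    """d h_L / d h_0 for the plain stack h_{l+1} = tanh(w * h_l)."""
    h, grad = x, 1.0
    for _ in range(depth):
        a = math.tanh(w * h)
        grad *= w * (1.0 - a * a)  # derivative of tanh(w * h) w.r.t. h
        h = a
    return grad


def residual_grad(x: float, w: float, depth: int) -> float:
    """d h_L / d h_0 for the residual stack h_{l+1} = h_l + tanh(w * h_l)."""
    h, grad = x, 1.0
    for _ in range(depth):
        a = math.tanh(w * h)
        grad *= 1.0 + w * (1.0 - a * a)  # identity term + branch derivative
        h = h + a
    return grad


print(plain_grad(0.5, 0.5, 30))     # tiny: every factor is at most 0.5
print(residual_grad(0.5, 0.5, 30))  # at least 1: every factor is at least 1
```

In the plain stack each local factor is at most `w = 0.5`, so the product shrinks geometrically with depth; in the residual stack each factor is `1 + (something non-negative)` here, so the gradient does not decay toward zero. In this toy setting it can instead grow, which is one reason practical networks still combine skip connections with normalization.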


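We introduce an inductive bias that our target function has the form $f(\mathbf{x}) = \mathbf{x} + g(\mathbf{x})$, so functions close to the identity (small $g$) are easy to represent.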


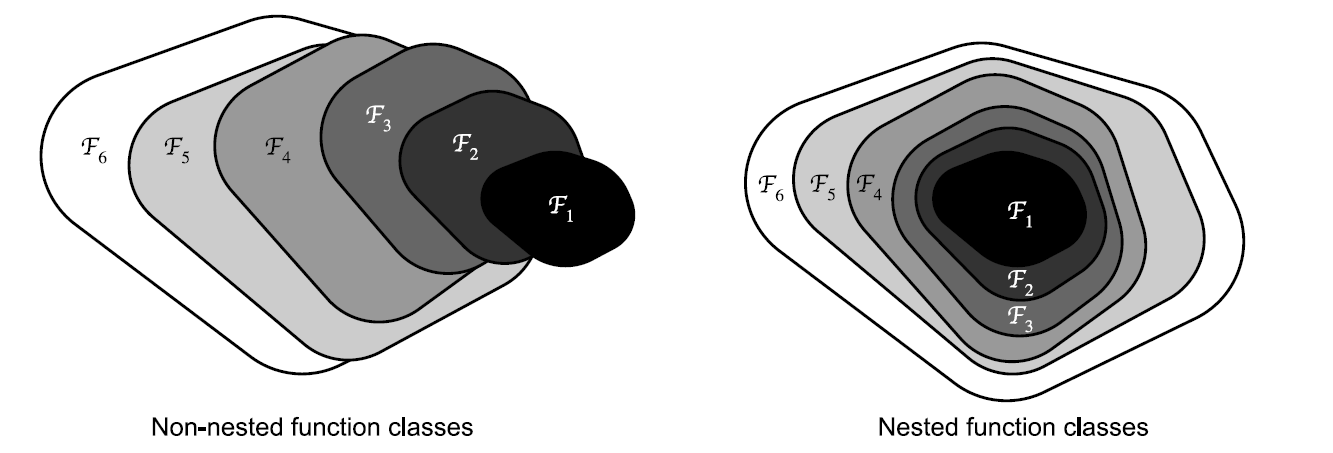

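It enforces a nesting of the function classes that the model is capable of learning: since a residual block can represent the identity by setting $g = 0$, every function a network can express remains expressible after another residual block is added, so the function class of the deeper model contains that of the shallower one.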
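The nesting argument can be written as a short derivation. The notation is introduced here for illustration: $\mathcal{F}_L$ denotes the set of functions expressible by a network of $L$ residual blocks, and $B_g$ a residual block with branch $g$. It assumes the added block can make its branch exactly zero (e.g. all branch weights and biases set to zero) and that any activation after the addition leaves its input unchanged, which holds for a ReLU whose input is already non-negative, as it is when the previous block also ends in a ReLU.

```latex
\begin{aligned}
B_g(\mathbf{x}) &= \mathbf{x} + g(\mathbf{x}), \qquad g \equiv 0 \;\Rightarrow\; B_0 = \mathrm{id},\\
F \in \mathcal{F}_L \;&\Rightarrow\; B_0 \circ F = F \in \mathcal{F}_{L+1},\\
\text{hence}\quad \mathcal{F}_L \;&\subseteq\; \mathcal{F}_{L+1}.
\end{aligned}
```

A plain stack of layers offers no such built-in guarantee, because an added plain layer has to learn the identity through its weights rather than getting it for free from the skip connection.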