- MARL extension of PGM algorithms. They gradually vary the action probabilities within a learned policy.

IGA

-

We can perform Gradient Descent to update the policy towards maximizing the expected reward.

-

A variant is called infinitesimal gradient ascent (IGA) defined for two agents and two actions.

-

Assumption: Agents have knowledge of their reward matrix

-

In IGA, we update the gradient in an unconstrained manner and then project it so that it becomes a valid probability distribution.

-

IGA is not guaranteed to converge with a fixed learning rate. It will converge when we vary the learning rate and the overall step size is bounded. We follow the win or learn fast.

- If the agent is losing, it should try to adapt quickly (i.e., high learning rate)

- If the agent is winning it should adapt slowly since the other agent will adapt (i.e., low learning rate)

- Winning is determined by comparing the actual expected reward with the expected reward achieved by a Nash equilibrium policy.

- Mixed with IGA we call this WoLF-IGA and it is guaranteed to converge to a Nash Equilibrium in general-sum games with two agents and two actions.

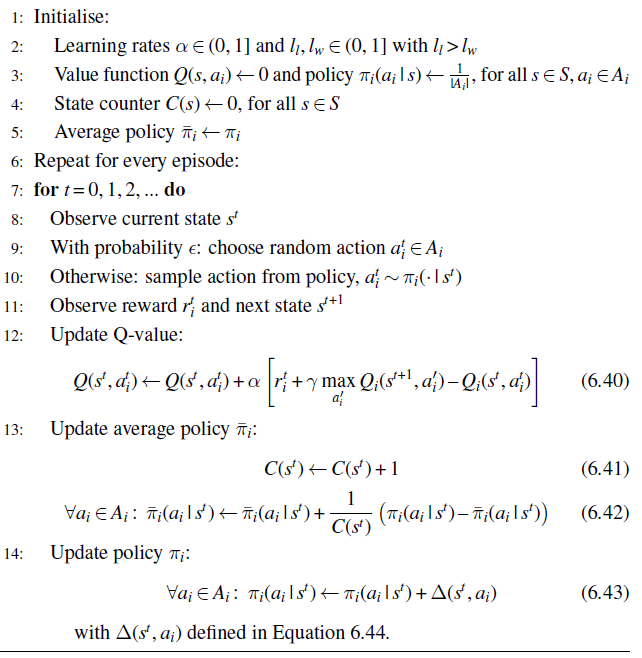

WoLF-PHC

- WoLF-IGA is generalized to WoLF with Policy Hill Climbing which is applicable for a general sum game with any number of agents and actions. It also removes the requirement of knowledge about the reward function or policy

- It learns

as in standard Q learning - It makes use of an average policy

computed over past policies. Rationale: As in Fictitious play, the average policy will converge to a Nash equilibrium (in most cases). are the learning rates for losing and winning.

- It learns

-

In the above,

determines how to move the policy closer to the greedy policy with respect to , calculated as moving probability mass from all non-greedy actions to the greedy actions. We have the following -

The minimum in the definition of

-

It improves upon fictitious learning by making the policy updates smoother 1

GIGA

-

Generalized Infinitesimal Gradient Ascent (GIGA) generalizes IGA to normal-form games with more than two agents and actions such that we achieve no-regret.

-

Assumption: The past actions of other agents can be observed.

-

Like IGA, we update using the unconstrained gradient, however we use the gradient in terms of the actual rewards after observing the past actions of other agents.

-

The expected reward for an agent

The update rule then becomes the following, using step size

-

GIGA achieves no-regret if all agents use a step size of

Where

- This implies that the empirical action distribution of agents using GIGA converges to coarse-correlated equilibrium

Links

-

Albrecht, Christianos, and Schafer - Ch 6.4

- 6.4.5 - Contains a discussion of GIGA for the case of two player games.