-

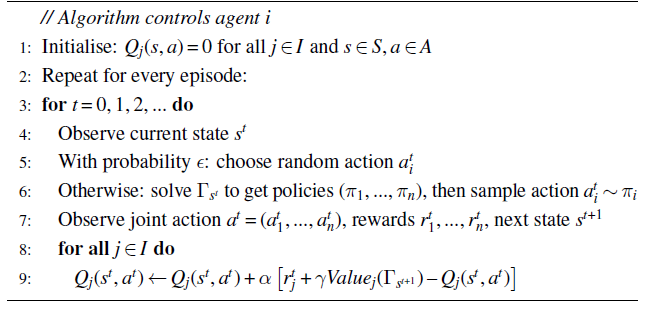

Joint Action Learning uses Temporal Difference Learning to learn estimates of the joint-action value models to estimate the expected returns

-

Here we use something similar to

-learning but for joint action -

JAL-GT is a variant of this where we frame the problem as a Static game

with the reward function being given as. - Learning

then becomes discovering the right game to play

- Learning

- We solve

using a game theoretic solution concept to obtain the solution — a policy from which - Minimax Q-Learning is guaranteed to learn the unique minimax value of the stochastic game under the assumption of all joint actions and states being tried infinitely often.

- Policies trained under Minimax Q-Learning do not necessarily exploit weaknesses to opponents when they exist.

- At the same time, Minimax policies are robust to exploitation by assuming a worst-case opponent during training

- Nash Q-Learning is guaranteed to learn a Nash equilibrium and is applicable to general-sum games but it is very restrictive

- It requires that all joint actions and states being tried infinitely often, and all normal-form games either

- Have a global optimum where each agent individually achieves its maximum possible return ; or

- Have a saddle point in which if any agent deviates, then all other agents will receive a higher expected return.

- These assumptions bypass the equilibrium selection problem since in either a global optimum or a saddle point, the expected return of each agent is the same.

- Implicitly, we have that agents consistently choose either global optima or saddle points.

- It requires that all joint actions and states being tried infinitely often, and all normal-form games either

- Correlated Q-Learning uses a correlated equilibrium but we need to modify the algorithm so that agents sample from correlated equilibrium

instead - This has an advantage over Nash Q-Learning

- It explores more solutions with potentially higher expected returns

- It can be computed efficiently with Linear Programming

- Convergence to a correlated equilibrium is not guaranteed.

- Equilibrium selection becomes a concern, however this can be mitigated using a protocol to consistently select an equilibrium (i.e., highest welfare)

- This has an advantage over Nash Q-Learning

- Minimax Q-Learning is guaranteed to learn the unique minimax value of the stochastic game under the assumption of all joint actions and states being tried infinitely often.

Limitations

-

JAL is not applicable to certain games since information in the joint action value model

is insufficient to construct an equilibrium policy. The limitations come in finding a deterministic equilibrium but for a game where many stochastic equilibria exist instead. -

A stationary equilibrium is one which is not conditioned on the state

rather than the history . s -

No Stationary Deterministic Equilibrium Theorem (Zinkevich, Greenwald, and Litman (2005) )

Let

- Specific action probabilities may not be computable using

functions alone